I vividly remember the excitement of seeing the first Outfunnel trials started by people I didn’t know. After more than six months of planning and building, we had the first tangible proof that what we were building mattered.

Due to old habits and my background at Pipedrive, I wanted to see all my signups in a CRM from the get-go. This gave me a visual overview of the health of our signups, basic funnel/pipeline reporting, and the ability to integrate our customer data with various marketing tools.

This is what our onboarding pipeline stages looked like (and still look like):

- Signed up, no activity – zero engagement after signup

- Connected CRM – has connected a CRM but not configured any features

- Set up at least 1 feature – has played with the product but without real usage

- Live data moving (aha moment) – real data synced at least once

- Actively using – data actively synced over multiple days, getting real value from the product
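The stages above boil down to a simple rule: place each account in the furthest stage it has reached. Below is a minimal Python sketch of that rule, the kind of logic that could later sit behind an automated stage move. The `Usage` fields and the three-day threshold for “multiple days” are hypothetical illustrations, not Outfunnel’s actual schema.

```python
from dataclasses import dataclass
from enum import Enum


class Stage(Enum):
    # Stage names mirror the onboarding pipeline described above.
    SIGNED_UP_NO_ACTIVITY = "Signed up, no activity"
    CONNECTED_CRM = "Connected CRM"
    FEATURE_SET_UP = "Set up at least 1 feature"
    LIVE_DATA_MOVING = "Live data moving"
    ACTIVELY_USING = "Actively using"


@dataclass
class Usage:
    # Hypothetical per-account usage fields; a real schema will differ.
    crm_connected: bool
    features_configured: int
    days_with_synced_data: int


# Hypothetical threshold for "data synced over multiple days".
ACTIVE_DAYS_THRESHOLD = 3


def classify(usage: Usage) -> Stage:
    """Return the furthest pipeline stage this account has reached."""
    # Check from the most advanced stage down, so the first match wins.
    if usage.days_with_synced_data >= ACTIVE_DAYS_THRESHOLD:
        return Stage.ACTIVELY_USING
    if usage.days_with_synced_data >= 1:
        return Stage.LIVE_DATA_MOVING
    if usage.features_configured >= 1:
        return Stage.FEATURE_SET_UP
    if usage.crm_connected:
        return Stage.CONNECTED_CRM
    return Stage.SIGNED_UP_NO_ACTIVITY
```

For example, `classify(Usage(crm_connected=True, features_configured=1, days_with_synced_data=0))` returns `Stage.FEATURE_SET_UP`.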

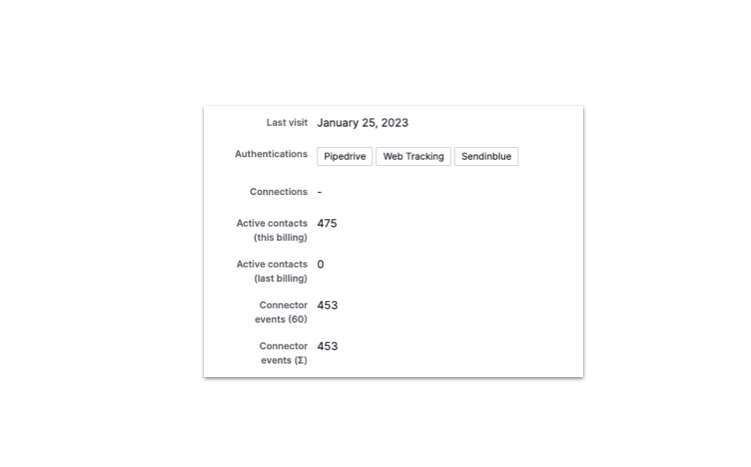

We also had a homegrown backoffice tool with more detailed information about users, but it had the problem all homegrown admin tools have: the data was stuck there, inaccessible to other tools.

Which was fine, given our low volume of trial signups.

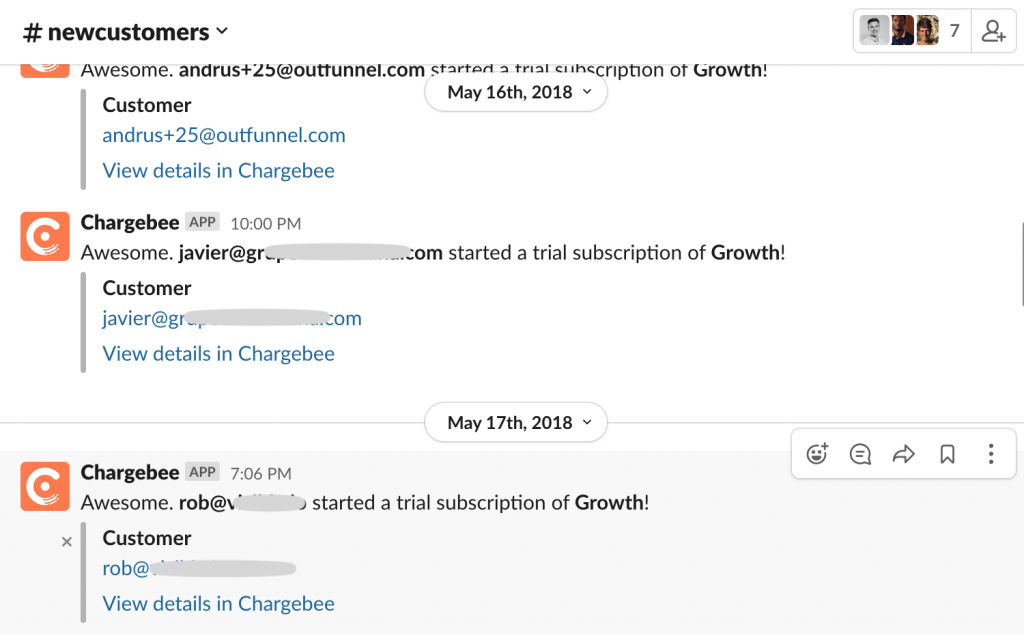

Stage 1: Low volume of signups, product usage data manually copied to CRM, outreach to almost everyone

In the early days, we were lucky to get 5 signups per day, so I reviewed each signup by hand and moved it along the pipeline accordingly.

I wanted to get a sense of how actively trial users were using the product. This information was available in the aforementioned backoffice tool, so I had to manually copy it to Pipedrive.

Our backoffice tool displayed only cumulative usage stats, so it was impossible to tell whether a user’s activity had happened on a single day or was spread evenly across the trial. To track progress during the trial, I had to copy-paste the data several times for each user.

I also tried to get feedback and input from as many signups as possible. I had the habit of opening their website to get a better sense of who was on the other end, and I aimed to write to each new user.

It was a somewhat tedious but illuminating period, both for building the company and for defining our product-led sales process down the line.

Epiphany: not all users are equal, time to start automating

First, it took a while to get anyone to respond.

Then a picture started to emerge from replies, product usage, googling trialists’ names, and calls.

In the first three stages of our onboarding journey/pipeline, people got stuck for similar reasons.

- No need. They had started the 14-day free trial on a whim, without a clear need or intent to buy. Nothing we could have said or done would have turned them into active users.

- No time. There was a need and our app looked promising, but there was no time to get things properly set up. Sending reminders during and after the trial got some of them back on track, and stating that setup would take less than 15 minutes was a nice extra incentive.

- No clarity. There was a need of some sort, but they couldn’t figure out how Outfunnel solved it. Reiterating our core features and unique value proposition, and explaining them better, changed some of their minds.

- Issues. No app is perfect, and this was especially true of our first version. The initial experience was far from ideal: there were bugs, infra hiccups, and UX dead ends. Unless we had completely messed up (which happened a couple of times), we could win some of those signups back.

If you look at these four reasons, you can address all of them by:

- reminding them that you exist

- stating your value proposition and highlighting the key customer benefit

- showing that setup takes 5-15 minutes, not hours, and

- offering human contact, i.e. scheduling a call.

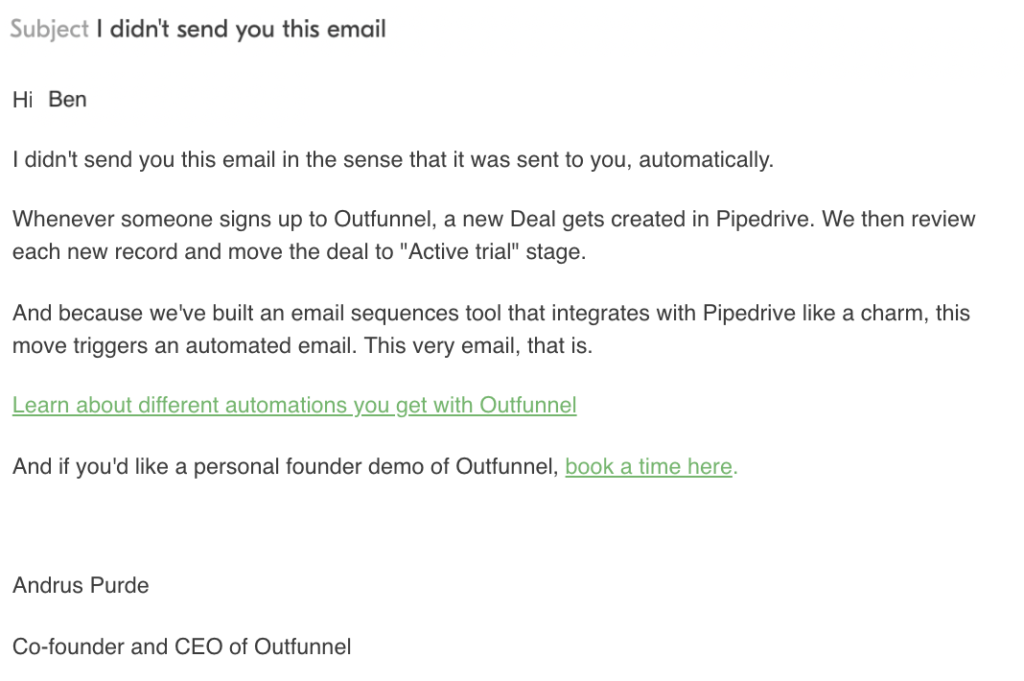

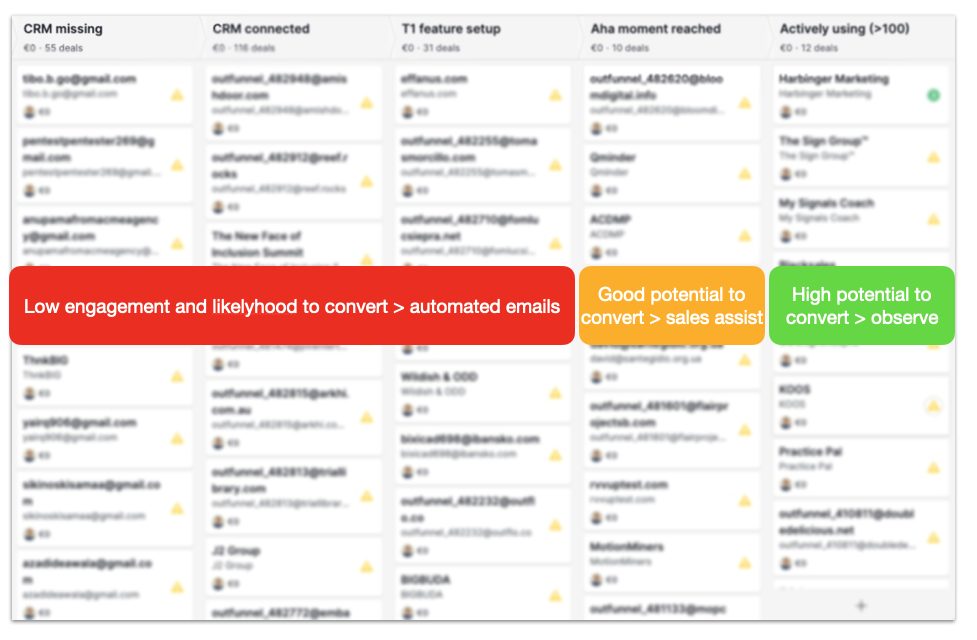

Stage 2: Time to automate onboarding for low-engagement trialists

And so we decided to stop looking at individual signups in the early stages of our onboarding pipeline and instead send them all similar onboarding emails, addressing the issues we had identified with the fixes we had seen work.

We still reviewed each signup’s usage manually, but we stopped writing personalized emails to every single trial user.

Here’s an email that signups received the day after they had signed up:

Our volume of signups had grown to 100-150 per month, so while it still took time to review each signup’s usage stats a couple of times during the trial, we no longer spent time writing “manual” emails to most of them.

In essence, we cut the time needed for user onboarding by more than half. This was a HUGE win for us at the time, and the first real win on our product-led journey.

Conversion to active trial users and trial-to-paid conversion stayed the same or even improved, as we had more time to spend with active trial users.

Stage 3: Going fully automatic with user onboarding pipeline management, personal contact only where it made sense

Once this process had run smoothly for a couple of months, my colleague Markus wanted to take things further.

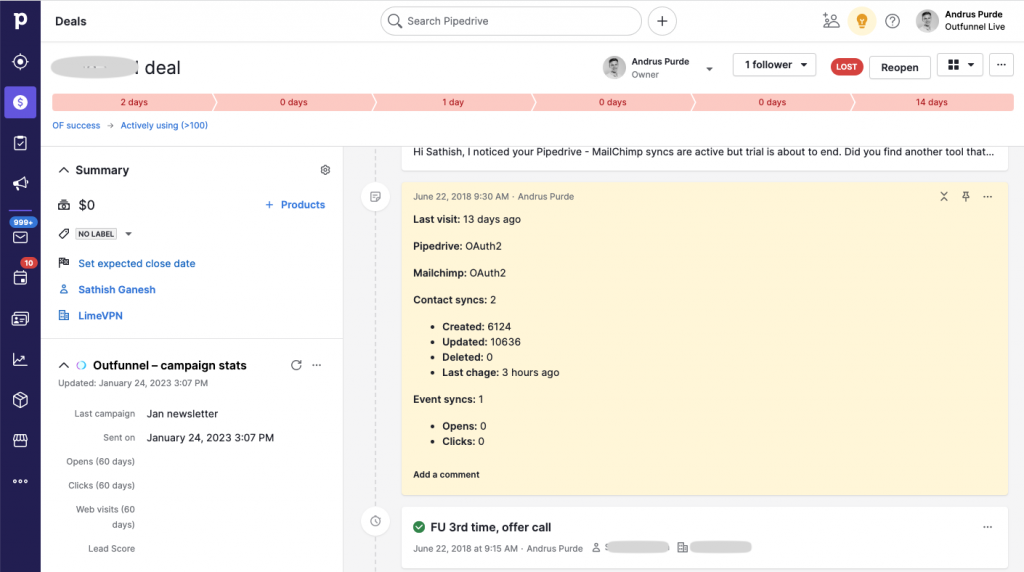

Pipedrive had released workflow automation, which meant it could automatically move deals in our onboarding pipeline based on any input. So our dev team built a basic API connection between our database and Pipedrive that sent usage data, such as the events each account had sent or synced to its CRM.

We then set up a Pipedrive workflow automation to move each signup to the correct stage based on that usage data.
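A minimal sketch of what such a connection might look like, assuming Pipedrive’s v1 REST API (`PUT /v1/deals/{id}` with an `api_token` query parameter) and usage stats stored in custom deal fields. The usage field names and the placeholder keys are hypothetical: Pipedrive identifies custom fields by generated keys, so you would look up your own in your account. The function only builds the request; passing it to `urllib.request.urlopen` would send it. Check Pipedrive’s current API reference before relying on any of this.

```python
import json
import urllib.parse
import urllib.request

PIPEDRIVE_BASE = "https://api.pipedrive.com/v1"

# Hypothetical mapping from our usage stat names to Pipedrive custom field keys.
# Replace the placeholders with the keys of the custom fields in your account.
FIELD_KEYS = {
    "events_synced_total": "REPLACE_WITH_FIELD_KEY_1",
    "features_configured": "REPLACE_WITH_FIELD_KEY_2",
}


def build_usage_update(deal_id: int, usage: dict, api_token: str) -> urllib.request.Request:
    """Build (but do not send) a PUT request that writes usage stats onto a deal."""
    # Only stats with a known custom field key are included; others are dropped.
    body = {FIELD_KEYS[name]: value for name, value in usage.items() if name in FIELD_KEYS}
    query = urllib.parse.urlencode({"api_token": api_token})
    return urllib.request.Request(
        url=f"{PIPEDRIVE_BASE}/deals/{deal_id}?{query}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )
```

One advantage of this split is that the code only writes raw numbers, while the stage logic lives in Pipedrive’s workflow automation and can be adjusted in the CRM without a deploy.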

Our onboarding was now fully automated:

- Each signup sent to Pipedrive from Chargebee via Zapier

- App usage data sent from our database to Pipedrive via a custom integration, using Pipedrive’s API

- Each signup record moved to the correct stage automatically based on product usage, using Pipedrive’s workflow automation

- Automated emails to people from Outfunnel and/or Mailchimp, with content and frequency based on product data synced to the CRM

All in all, this saved 80%, if not 90%, of the time spent on onboarding, while maintaining or even increasing product engagement and conversion to paid, because we had more time to spend with promising signups.

And please note that automating onboarding didn’t mean that we became inaccessible to new trial users. Quite the opposite. We knew human contact was important, and we actively encouraged users to reply to our emails and/or schedule a call via Calendly.

Stage 4: What’s missing, and what’s next

So over time, we’ve “rolled our own” simple product-led sales playbook. Trial users with low engagement get automated emails with relevant messages.

Users with modest engagement and high potential show up in a particular stage of our pipeline. When we want to reach out to them, we don’t need to open any other tabs or tools to see what they have or haven’t done in the app: key usage stats and key events in the user journey are already synced to our CRM.

All of this cost us some zaps and a few days of development time. So far, so good.

Two things are missing/annoying though.

First, whenever the product changes meaningfully, for example when we add a major new feature that is relevant to onboarding, we need to create a development task to sync usage data for that feature. As a result, we usually wait days or weeks before new product events or properties show up in our CRM.

This also means that we’re losing some developer time to internal workflows instead of creating customer value.

Second, we’re already finding that syncing raw engagement data is good enough for simple use cases but limiting for more advanced workflows, such as:

- Seeing progress and trends in feature usage (syncing not only cumulative usage stats but also separate counts for the last 7 and last 30 days)

- More control over the volume of key events. For example, the first time a feature is used or a colleague is invited is highly relevant in the CRM, but the 36th time is more noise than signal.

- The ability to combine different product events and usage properties into holistic lead scores. Defining product-qualified leads, if you will.
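These three ideas can be prototyped in a few lines before deciding what to sync. The sketch below assumes product events are available as `(name, timestamp)` pairs; the function names, signal names and score weights are hypothetical, chosen only to illustrate rolling usage windows, first-occurrence filtering and a simple additive lead score.

```python
from datetime import datetime, timedelta

# Hypothetical weights for a product-qualified lead score; tune to your own data.
SCORE_WEIGHTS = {"live_data": 40, "active_last_7d": 30, "invited_colleague": 30}


def usage_windows(event_times: list[datetime], now: datetime) -> dict:
    """Split one feature's event timestamps into total, last-7-day and last-30-day counts."""
    def within(days: int) -> int:
        # Count events between (now - days) and now, inclusive.
        start = now - timedelta(days=days)
        return sum(1 for t in event_times if start <= t <= now)

    return {"total": len(event_times), "last_7d": within(7), "last_30d": within(30)}


def first_occurrences(events: list[tuple[str, datetime]]) -> dict:
    """Keep only the first time each event name happened; later repeats are dropped."""
    first = {}
    for name, t in sorted(events, key=lambda e: e[1]):
        first.setdefault(name, t)
    return first


def lead_score(signals: dict) -> int:
    """Sum the weights of the signals that are truthy."""
    return sum(weight for key, weight in SCORE_WEIGHTS.items() if signals.get(key))
```

For example, an account whose `live_data` and `active_last_7d` signals are true scores 70 under these weights.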

So that’s next for us.